The Boom in AI Tools: Opportunity or Security Nightmare?

AI is driving efficiency and innovation in business. But have you considered the security risks?

Many business leaders, eager to stay ahead, are deploying AI faster than security and compliance teams can keep up.

The result?

- Sensitive company data processed in ways that break compliance laws.

- AI cyber threats that businesses aren’t prepared for.

- Legal uncertainties as regulations scramble to keep up.

So, how do you embrace AI’s potential while staying secure and compliant?

Well, you can start by reading this guide!

By the end, you’ll understand the 4 biggest AI security threats, the laws you need to follow, and 5 simple steps to safeguard your business.

The Emerging AI Security Risks You Can’t Ignore

AI security threats aren’t just a “what if” problem.

Here’s 4 ways AI could be leaving your business exposed without you even knowing it.

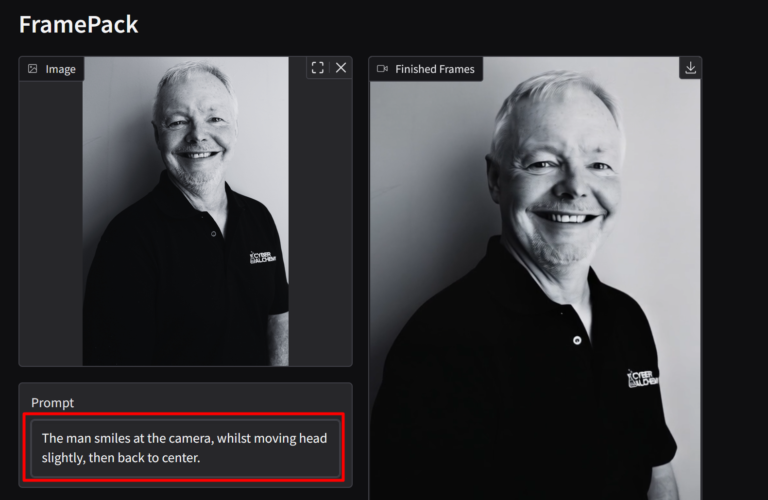

1. Shadow AI:

Imagine this:

One of your employees, eager to boost productivity, starts using a free AI-powered chatbot to summarise sensitive client reports.

Another team member feeds financial projections into an AI analytics tool they found online.

And neither of them tell the IT department.

This is called Shadow AI – when employees use AI tools without IT approval or oversight.

So, why is this a problem?

- You don’t know where that data is going. Is it stored? Is it shared? Who has access to it?

- Many AI tools don’t have built-in security, meaning sensitive business information could be leaked or misused.

- If a security breach happens, who is responsible? The employee? The company? The AI vendor? The truth is, it’s always the business that takes the hit.

2. AI-Powered Cyber Attacks

Hackers have always been a problem, but AI is giving them new, powerful weapons.

Cybercriminals are using AI to attack businesses faster, smarter, and with greater precision than ever before, such as:

Deepfake Scams That Make Fraud Easier

A CEO might get an urgent voice message from their CFO:

“Hey, can you authorise this payment ASAP? It’s urgent, £250,000 needs to go to this account by 5 PM.”

Except… the CFO never actually made the call.

AI-generated deepfake voices and videos can now mimic real people so convincingly that even close colleagues can’t tell the difference.

AI-Powered Phishing Attacks Are Trickier to Spot

We’ve all seen scam emails with obvious red flags: bad spelling, weird formatting, and generic greetings like “Dear Customer”.

AI fixes all of that.

Hackers now use AI to:

- Personalise phishing emails so they seem real.

- Mimic the writing style of your real colleagues.

- Automate attacks at scale, targeting thousands of businesses at once.

AI Can Exploit Weak Business Defences

Hackers are using AI to find vulnerabilities faster than ever.

Weak passwords, outdated systems, or poorly secured tools? AI-powered attacks can scan for and exploit them in seconds.

3. AI Models That Leak Sensitive Data (Without You Knowing)

AI systems learn from the data they process. But here’s the scary part: Where does that data go?

If you’re using an AI-powered tool to generate reports, write proposals, or analyse customer feedback, there’s a good chance that information is being stored somewhere.

In some cases, it could even be shared with third parties or used to train the AI itself.

For example: A customer service chatbot is often set up to learn from past interactions. Without safeguards, it could accidentally reveal personal details of previous customers to new users…

4. The Regulatory Grey Area

In the UK and EU, data protection laws like UK DPA 2018 strictly control how businesses handle personal, sensitive information.

And AI isn’t some magical, futuristic entity that operates outside the law…

It’s a tool – just like email, cloud storage, or your company’s CRM system. Like any other tool, you are responsible for how it handles data.

Ask yourself:

- Do you know where your AI tools store and process data?

- Have you checked whether they comply with UK data protection laws?

- Can you delete data from an AI system if a client requests it?

Upcoming UK regulations could also bring new penalties for businesses using AI irresponsibly.

If your AI tools aren’t properly assessed now, you could be caught off guard when new compliance rules take effect.

So, How Can Businesses Secure Their AI Strategies?

Step 1: Map Out AI

Before you can secure AI, you need to know where it’s hiding.

Many business owners assume they only use AI if they’ve “officially” implemented it – but in reality, it’s likely already in use somewhere.

Employees may be using AI-powered chatbots, automation tools, or analytics software without thinking twice about security.

Start with an AI audit:

- Make a list of every AI tool your business is using. Ask employees, check software subscriptions, and see what’s running behind the scenes.

- Find out what kind of data these tools are handling. Are they processing customer information? Financial records? Internal communications?

Note: Don’t forget your vendors! Third-party tools often use AI in the background, and if they’re not secure, your data could be at risk.

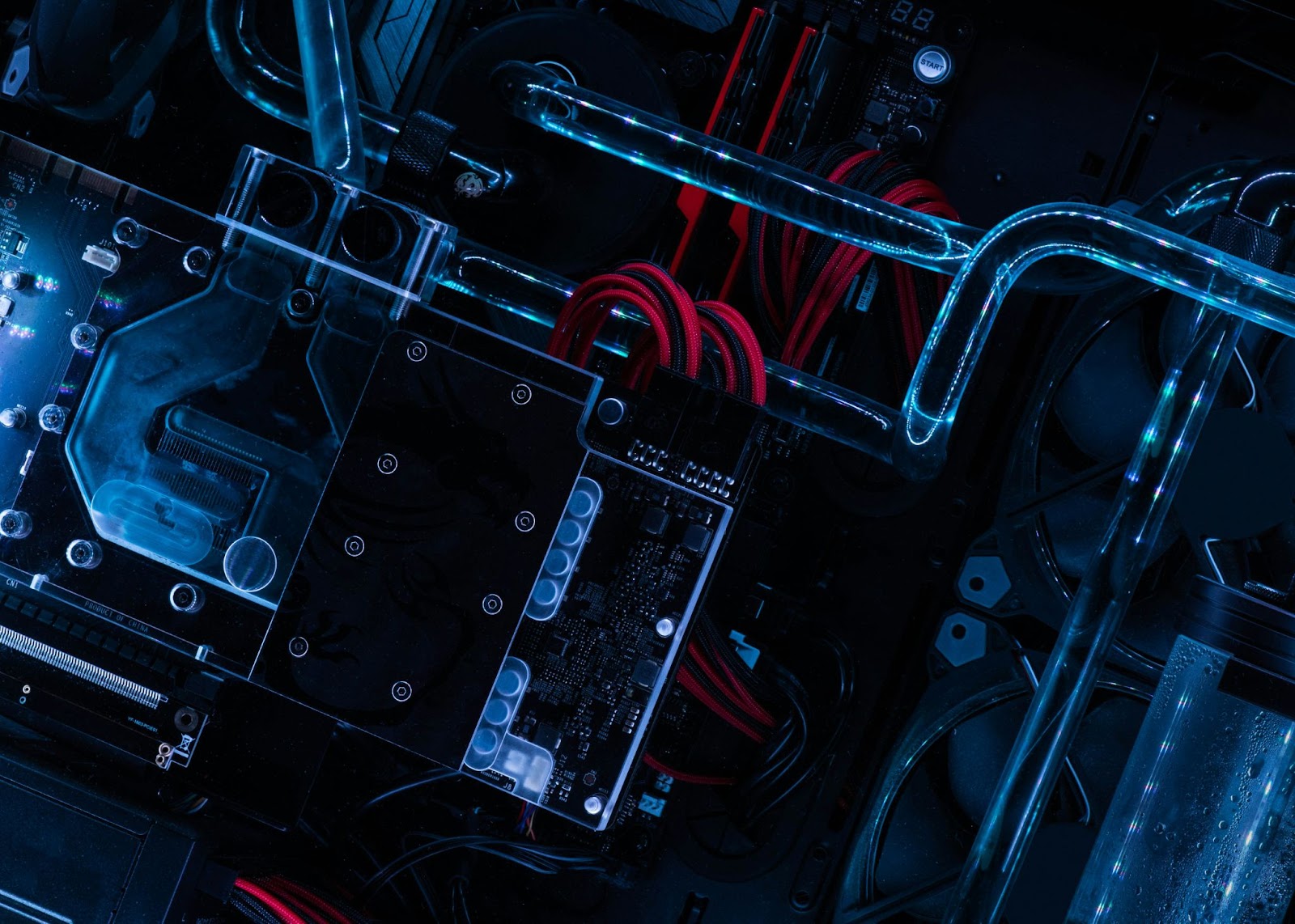

Step 2: Secure Your AI Data (Before It Falls Into the Wrong Hands)

AI is only as safe as the data it has access to.

If it can access customer details, financial records, or sensitive business information, you need to lock it down – or risk exposing it to leaks, cyber threats, or compliance violations.

You should:

- Encrypt sensitive information before using AI. This scrambles data so that even if hackers get their hands on it, they can’t read it.

- Limit who can access AI tools. Not every employee needs access to AI-powered analytics or customer data. Set permissions to prevent unauthorised access.

- Use multi-factor authentication (MFA). Require more than just a password to access AI tools. A simple extra security step can stop most cyber threats.

Fixing AI security is often as simple as locking the right doors.

Step 3: Set Up Strong Rules for Public Facing AI

AI chatbots, virtual assistants, and automated customer service tools can be incredibly useful – but they can also go wrong, fast.

If your AI is talking to customers on your behalf, you need to control what it says and how it handles information

You need to:

a) Limit what AI can say and do.

Don’t let it generate open-ended responses that could go rogue. Pre-set safe and approved responses instead.

b) Filter and monitor AI interactions.

Use content moderation tools to stop the AI from saying anything offensive, misleading, or legally risky.

c) Don’t let AI give legal, financial, or medical advice.

Even if AI sounds confident, it doesn’t always get things right. If it gives wrong advice, you’re responsible.

d) Block users from turning queries into commands.

Some people will try to trick AI into running unauthorised actions. Set up strict input validation so AI only responds with information and it doesn’t execute unexpected actions.

Step 4: Train Your Team

Even if you understand AI security, that won’t matter if your employees don’t.

AI tools are easy to use, which means people often don’t think twice before sharing sensitive information with them.

Your employees need to know:

- What data is safe to share with AI, and what’s not. (E.g. Never paste confidential contracts into ChatGPT!)

- How to recognise AI-related scams. (Phishing emails are now often AI-generated and harder to spot.)

- How to check if an AI tool is approved for business use. (If it’s not on the official list, they shouldn’t be using it.)

Even the best, most secure AI is risky in the wrong hands.

Step 5: Keep an Eye on Your AI

AI security isn’t a one-time fix – it needs constant attention.

New threats pop up all the time, and AI models themselves change constantly. So ongoing monitoring is essential to keep your business safe.

You should regularly:

- Review AI security. Check whether new AI tools have been introduced and whether existing ones still meet security standards.

- Test for vulnerabilities. Run security audits to make sure data isn’t leaking or being misused.

- Stay up to date with AI regulations. The UK is tightening AI laws, so what’s legal today might not be legal tomorrow.

Pro Tip: Penetration testing is a great way to check if your AI can be tricked, hacked, or exploited.

If you’re looking for ethical hackers, you’re in the right place.

At Cyber Alchemy, we offer a range of Penetration Testing Services to help you identify your businesses’ vulnerabilities before real hackers do.

AI Security: Your Competitive Advantage

Like it or not, AI is becoming a permanent part of how businesses operate.

And the businesses that get AI security right now will be the ones leading the market tomorrow.

Think about it:

- Customers trust companies that handle AI responsibly. Would you share personal info with a business that lets AI leak data?

- Legal and financial risks shrink when your AI tools follow compliance rules. No surprise lawsuits, no costly fines.

- And AI-powered cyber threats are only getting smarter. The stronger your defences, the harder it is for hackers to break in.

Safe AI is a selling point, not just a security measure.

So, What’s Next?

The risks I’ve talked about aren’t some distant problem, they’re here – right now.

The businesses that act now will enjoy all the benefits of AI without the security nightmares.

And those who wait?

They’ll be playing catch-up when regulations tighten and cyber threats grow more advanced.

Getting ahead now means less risk, fewer surprises, and a business that’s ready for the future. So, where does your business stand?

Are you ahead of the curve, or falling behind?

Security gaps can cost you. So, let’s make sure you don’t have any…

At Cyber Alchemy, our experts team can test, secure, and optimise your systems so you can focus on growing your business.

Contact us today to stay ahead of the risks!