We Deepfaked Bob (with his consent)

Deepfakes have been headline news for years, but 2025 marks the moment they crossed an important threshold: video deepfakes that need only a single still image as their seed. Thanks to recent research from Lvmin Zhang and colleagues at Stanford, the open-source project FramePack can spin that solitary selfie into an entire moving clip, dissolving the last big technical barrier between amateurs and Hollywood-grade fakery. (github.com)

What’s truly mind‑bending is the hardware footprint. FramePack will run on a mid‑range gaming card with 6 GB of VRAM — that’s every NVIDIA xx60‑class GPU since 2017 — turning bedroom PCs into miniature VFX studios. (tomshardware.com)

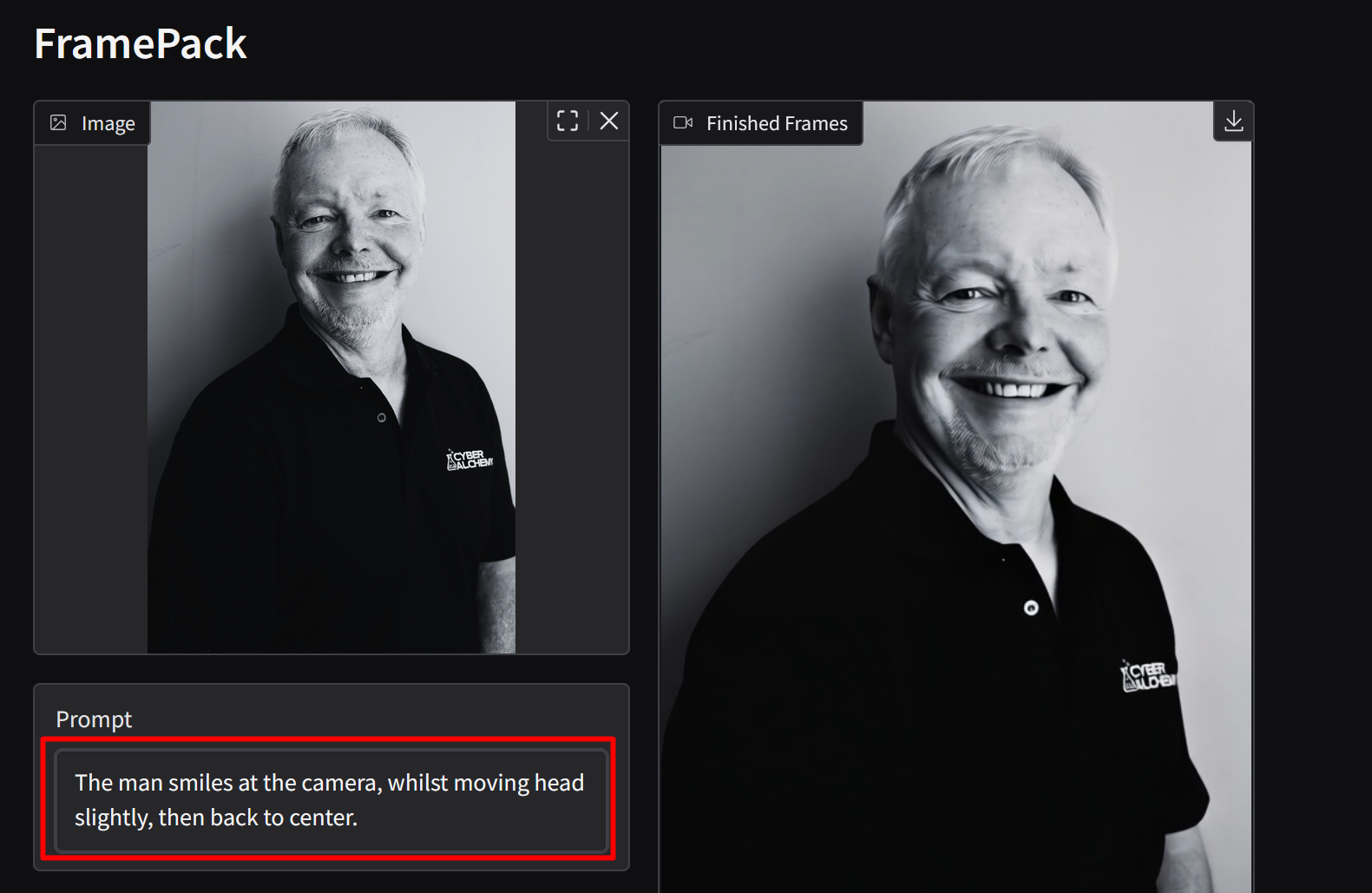

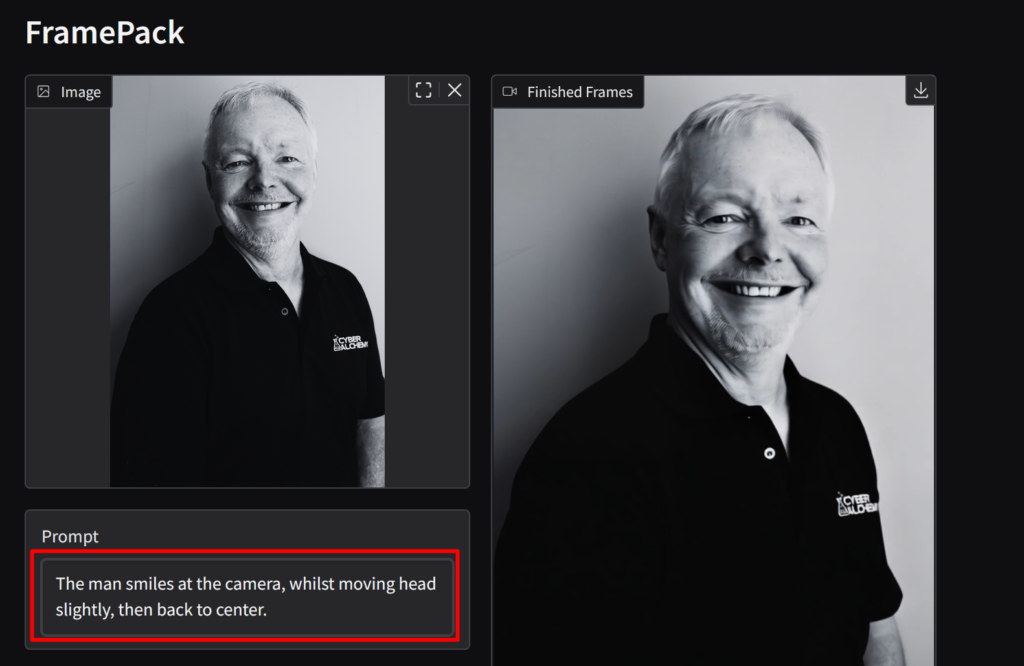

The Cyber Alchemy team took this lovely photo of Bob (credit to Ali for the photography) and fed it into the tool, along with a short prompt, to generate several short videos of Bob.

Videos can be between 1 and 120 seconds long. We must admit, however, that the longer a video runs, the weirder it gets.

Here is Bob:

We used the prompt: “The man turns to the left and back to the camera”

Bob again:

This time we prompted: “The man smiles and looks forward to the camera”

For the Geeks: Under the Hood — How FramePack Works

- Context Packing – Instead of feeding the entire video history into the model at each step, FramePack compresses prior frames into a fixed-length latent context, so the cost of generating each new frame stays (roughly) constant regardless of target duration. (github.com)

- bf16 Optimisation – Reduced‑precision tensors slash memory without brutal quality loss, squeezing the model into that 6 GB envelope. (Note: you’ll still want ≥ RTX 30‑series silicon for bf16 support.) (github.com)

- Progressive Preview – A Gradio GUI streams intermediate frames so you can abort misfires early and save GPU cycles.
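The context-packing idea can be illustrated with a toy budget calculation. This is a minimal sketch, not FramePack's actual code: it assumes, purely for illustration, that each older frame is compressed to half the tokens of the frame after it, and the names `packed_context_length`, `naive_context_length` and `BASE_TOKENS` are hypothetical. Under that assumption the history budget follows a geometric series, so it stops growing however long the clip gets.

```python
# Toy illustration of fixed-length context packing (not FramePack's real code).
# Assumption: the most recent frame keeps BASE_TOKENS tokens and each older
# frame is compressed to half the tokens of the frame after it.

BASE_TOKENS = 1536  # hypothetical token count for one uncompressed frame


def packed_context_length(num_past_frames: int, base_tokens: int = BASE_TOKENS) -> int:
    """Total tokens spent on history when older frames are compressed harder."""
    total = 0
    tokens = base_tokens
    for _ in range(num_past_frames):
        total += tokens
        tokens //= 2  # the next-older frame gets half the budget
    return total


def naive_context_length(num_past_frames: int, base_tokens: int = BASE_TOKENS) -> int:
    """Total tokens if every past frame were kept at full size."""
    return num_past_frames * base_tokens


if __name__ == "__main__":
    for n in (1, 4, 16, 64, 256):
        print(n, naive_context_length(n), packed_context_length(n))
```

The naive budget grows linearly with the number of past frames, while the packed budget never reaches twice `BASE_TOKENS`.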

Full install took us 20 minutes on an RTX 4080 workstation: clone the repo, grab the 13 B checkpoint, set the “preserve 8 GB RAM” slider, and go brew coffee.
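As a quick sanity check on the memory figures, the arithmetic below estimates the size of the weights alone for a 13 B parameter checkpoint at two precisions. It is a back-of-the-envelope sketch: the function name `weight_size_gib` is hypothetical, and activations and any auxiliary models are ignored. Even in bf16 the weights come to roughly 24 GiB, so fitting into a 6 GB VRAM envelope presumably relies on keeping part of the model in system RAM rather than on reduced precision alone. That last point is an assumption on our part, not a statement from the FramePack authors.

```python
# Back-of-the-envelope weight sizes for a 13-billion-parameter model.
# Only the weights are counted; activations and other components are ignored.

BYTES_PER_PARAM = {"fp32": 4, "bf16": 2}
GIB = 1024 ** 3


def weight_size_gib(num_params: int, dtype: str) -> float:
    """Size of the raw weights in GiB for the given precision."""
    return num_params * BYTES_PER_PARAM[dtype] / GIB


if __name__ == "__main__":
    params = 13_000_000_000
    for dtype in ("fp32", "bf16"):
        print(f"{dtype}: {weight_size_gib(params, dtype):.1f} GiB")
```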

Why This Is Equal Parts Awesome and Terrifying

| Upside | Downside |
|---|---|
| Rapid prototyping for adverts, training, and entertainment | Weaponised misinformation (politics, scams) (ft.com) |
| Accessibility: no cloud fees, no data exports | Non-consensual deepfake pornography (already rampant) (theguardian.com) |
| Democratised creativity for indie filmmakers and educators | Erosion of evidentiary trust in CCTV, body-cam and news footage |

In short: the genie is way out of the bottle.

What's so interesting, and so terrifying, about this technology is that it runs on a GPU with 6 GB of VRAM; for reference, that's nearly every GPU made in the last 8 years. In a way, deepfake video has been democratised. That, of course, brings several terrifying use cases: deepfake politicians, deepfake pornography, and so on.

The Law Is (Slowly) Waking Up

- UK Online Safety Act (2023) & Sexual Offences Act amendments — Distributing explicit deepfakes is now a criminal offence in the UK. (gov.uk, theguardian.com)

- EU AI Act (2024) – Article 50(4) (numbered Article 52(3) in earlier drafts) mandates clear labelling of synthetic media in the EU. (herbertsmithfreehills.com)

- Proposed UK AI Bill (2025 draft) — Would place liability on developers of “nudification” apps and classify deepfake sexual abuse as violence against women and girls. (theguardian.com)

Enforcement, however, is another matter; code travels faster than case law.

Can We Detect or Defeat Deepfakes?

- Watermarking Standards – A multi‑stakeholder working group led by ITU and IEC is mapping standards for AI watermarking and authenticity metadata. (aiforgood.itu.int, etech.iec.ch)

- Proactive Identity Watermarks – Embedding imperceptible signals at capture time so any tampering degrades the watermark and raises a red flag. (openaccess.thecvf.com)

- Forensic AI Detectors – CNNs that spot artefacts in frequency space currently score > 80 % on known datasets, but degrade rapidly when the underlying generator changes. (FramePack clips already fool several 2024 detectors in our lab tests.)

- Cryptographic Authenticity Chains – Initiatives such as the Coalition for Content Provenance and Authenticity (C2PA) push camera‑to‑cloud signatures, but adoption is patchy.

Bottom line: Detection is a cat‑and‑mouse game. Assume some fakes will slip through.
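To make the authenticity-chain idea concrete, here is a minimal sketch of the sign-at-capture, verify-on-receipt pattern. It is not the C2PA format: real provenance schemes use certificate-based signatures, whereas this sketch swaps in an HMAC with a shared secret from Python's standard library so that it stays self-contained. The function names and the key are hypothetical.

```python
import hashlib
import hmac

# Hypothetical shared secret; a real system would use a per-device private key.
DEVICE_KEY = b"example-device-key"


def sign_media(media: bytes, key: bytes = DEVICE_KEY) -> str:
    """Return a hex tag binding the key holder to the exact media bytes."""
    digest = hashlib.sha256(media).digest()
    return hmac.new(key, digest, hashlib.sha256).hexdigest()


def verify_media(media: bytes, tag: str, key: bytes = DEVICE_KEY) -> bool:
    """True only if the media is byte-for-byte what was signed with this key."""
    expected = sign_media(media, key)
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    original = b"\x00\x01raw video bytes"
    tag = sign_media(original)
    print(verify_media(original, tag))            # untouched footage
    print(verify_media(original + b"\xff", tag))  # footage altered after signing
```

Any change to the bytes after signing, or a tag produced with a different key, makes verification fail. Note that this proves integrity since capture, not that the captured scene was real.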

Defensive Playbook for Organisations

- Threat Modelling – Identify which business processes could be harmed by synthetic video (CEO fraud, reputational hoaxes, evidence tampering).

- Out‑of‑Band Verification – Establish secondary channels (phone calls, in‑person checks) for any high‑stakes video or voice request.

- Media Hygiene Training – Teach staff and customers the tell-tale signs: inconsistent lighting, lip movements out of sync with the audio, unnatural blinking, metadata anomalies.

- Watermark at Source – Use cameras or software that embed signed C2PA provenance data; reject footage lacking provenance.

- Incident Response – Prepare takedown and PR playbooks; speed is everything once a fake goes viral.
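The out-of-band rule in particular is easy to encode as policy. The sketch below is one hypothetical way to do it: the `Request` fields, the channel names and the 10,000 threshold are all illustrative assumptions to be replaced with your own, not values taken from any standard.

```python
from dataclasses import dataclass

# Channels where a requester's face or voice could be synthetic.
SPOOFABLE_CHANNELS = {"video_call", "voice_call", "voicemail", "video_message"}
HIGH_STAKES_AMOUNT = 10_000  # hypothetical threshold, in your own currency


@dataclass
class Request:
    channel: str         # how the request arrived
    amount: float        # money at stake (0 for non-financial requests)
    changes_payee: bool  # e.g. "please use this new bank account"


def needs_out_of_band_check(req: Request) -> bool:
    """Require confirmation via a known-good second channel before acting."""
    if req.channel not in SPOOFABLE_CHANNELS:
        return False
    return req.amount >= HIGH_STAKES_AMOUNT or req.changes_payee


if __name__ == "__main__":
    urgent_transfer = Request(channel="video_call", amount=50_000, changes_payee=False)
    print(needs_out_of_band_check(urgent_transfer))
```

This sketch only covers audio and video channels, since those are what synthetic media targets; a real policy would likely treat email and chat requests too.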

Where Do We Go From Here?

Synthetic video is now as easy as typing a prompt. That’s equal parts creative rocket‑fuel and societal powder‑keg. Cyber Alchemy’s stance is clear:

We share, because attackers already know. Only by lifting the curtain can we harden our defences and educate the public.

Have questions, want a live demo, or need help assessing your exposure? Drop us a line — we love talking shop (and simulation theory) over coffee.